Computer Vision to Secure Your Surroundings with AI/ML Smart City Solution Built using Open Source Tools at the Edge

Written by Neethu Elizabeth Simon and Samantha Coyle

As the Internet of Things (IoT) comes to life across many application areas in a smart city, the number of “things” installed grows with every new service supported. A camera can be considered the ultimate "thing": a sensing device that generates enormous amounts of data for a large number of smart city services and applications. Fast, low-cost computation is supporting the growth of IoT in smart city deployments, and AI and Computer Vision (CV)-based solutions are emerging in several fields as smart cities grow. As a practical example, security solutions that aid situational awareness of the surroundings help keep assets and the public safe throughout a smart city. However, these solutions are increasingly difficult to develop and deploy due to resource constraints, hardware costs, security concerns, and high inference loads on edge devices. Our team developed a CV-based Security-as-a-Service solution using AI/ML. It provides a framework and processing pipeline for deploying an AI-assisted, multi-camera smart city solution for vehicular and walkway traffic that makes mobility smarter and safer for everyone. This article describes this situational awareness solution, covering its architecture and the learnings and challenges encountered during its design and implementation. We also discuss the ethical concerns that guided our moral compass in developing CV solutions.

Architecture

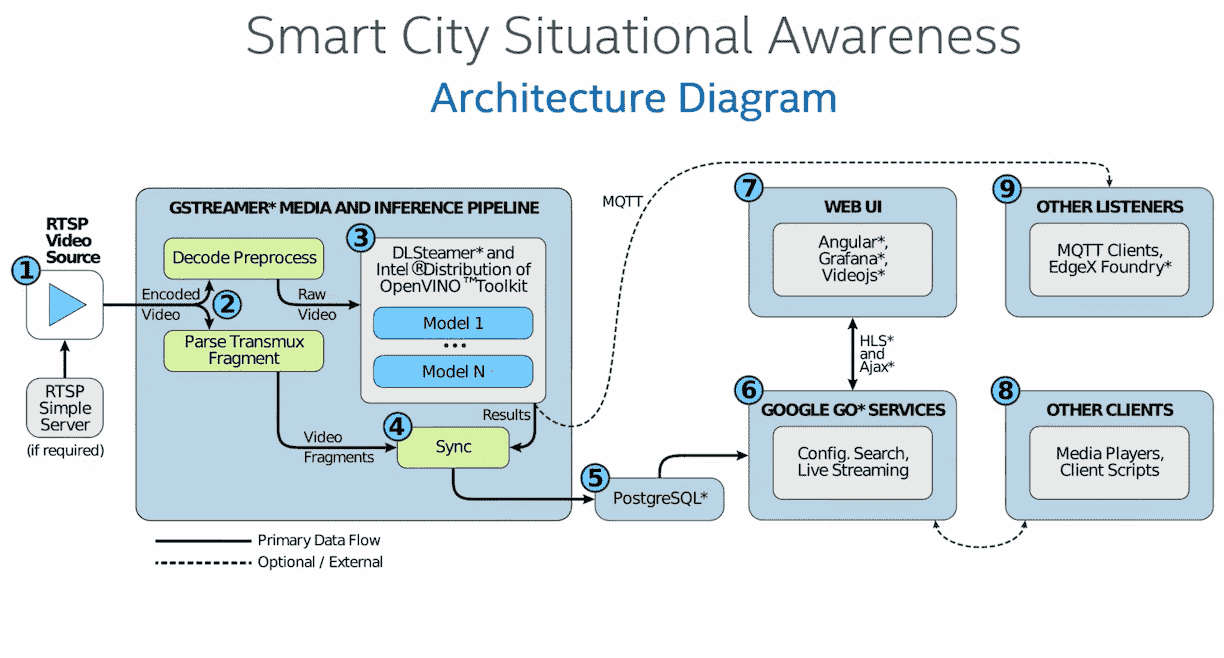

This implementation provides an AI-assisted reference design for smart cities that aims to achieve situational awareness and property security and management. It performs simple video analysis of streaming video sources such as security cameras, captures inference results using AI deep-learning models, and provides a web-based user interface to view video streams, filter and display inference results, configure and manage video-source metadata, and plot video-source locations on a world map. Figure 1 illustrates the architecture, which uses only open-source technology in a microservices-based design running in Docker containers.

Figure 1 Solution Architecture

- Using the GStreamer multimedia framework [1], the media pipeline retrieves an H.264-encoded video stream from an RTSP source. The encoded video is then split into an inference pipeline and a fragmentation pipeline.

- The inference pipeline decodes video into raw frames, which are used for inferencing via DLStreamer [2], a GStreamer plugin for OpenVINO’s Inference Engine (OIE) [3]. DLStreamer tells OIE to load pretrained weights and model definitions, formatted in OpenVINO’s "intermediate representation", which is optimized for Intel hardware. OIE feeds incoming frames to the models and uses their output to attach Regions of Interest to the frames. DLStreamer adds output labels and values based on a "model-proc" file (a JSON configuration that adds pre- and post-processing steps).

- The fragmentation pipeline splits the input video into segments suitable for HTTP Live Streaming (HLS). It synchronizes fragment timestamps with inference results, then indexes and stores them in a PostgreSQL database [4] for playback, search, and analysis.

- Go [5] microservices provide APIs to configure camera metadata, search for video based on inference labels, and retrieve HLS playlists and video fragments.

- Angular Web UI [6] uses these APIs to present live streams, recorded video, inference results, camera configurations, and video-source locations plotted on a Grafana [7] world map.

- Of the many media player frameworks available, we found the HTML5-based VideoJS framework the simplest to use, and it became central to displaying video.

- The pipeline can also post inference results via MQTT to listeners like EdgeX Foundry [8].
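The split into inference and fragmentation branches described above can be sketched as a `gst-launch`-style pipeline description. This is only an illustrative sketch, not the project's actual pipeline: the element choices (`decodebin`, and `hlssink2` standing in for the database-backed fragment store), the file paths, the broker address, and the topic name are all assumptions, and DLStreamer property names may differ between releases.

```python
def build_pipeline(rtsp_uri, model_xml, model_proc,
                   mqtt_address="localhost:1883",      # assumed broker address
                   mqtt_topic="inference/results"):    # assumed topic name
    """Return a gst-launch style description with two branches fed by a tee."""
    # Pull H.264 over RTSP and keep it encoded until the tee.
    source = (
        f"rtspsrc location={rtsp_uri} ! rtph264depay ! h264parse ! tee name=t"
    )
    # Inference branch: decode to raw frames, run detection, publish metadata.
    inference = (
        "t. ! queue ! decodebin ! videoconvert ! "
        f"gvadetect model={model_xml} model-proc={model_proc} device=CPU ! "
        "gvametaconvert format=json ! "
        f"gvametapublish method=mqtt address={mqtt_address} topic={mqtt_topic} ! "
        "fakesink sync=false"
    )
    # Fragmentation branch: the still-encoded video is cut into HLS segments.
    # hlssink2 writes to disk here; the article's solution stores fragments
    # in PostgreSQL instead (assumption made to keep the sketch short).
    fragmentation = (
        "t. ! queue ! hlssink2 target-duration=2 "
        "location=/tmp/segment%05d.ts playlist-location=/tmp/playlist.m3u8"
    )
    return " ".join([source, inference, fragmentation])


if __name__ == "__main__":
    print(build_pipeline("rtsp://192.0.2.10:554/stream1",
                         "model.xml", "model-proc.json"))
```

Keeping the tee ahead of the decoder means only the inference branch pays the decoding cost, while the fragmentation branch repackages the encoded stream as-is.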

Challenges and Learnings

Edge Deployment Resource Constraints

When the solution is deployed at the edge, storage and computational requirements are key constraints that must be considered carefully. Because video fragments are stored on premises in the PostgreSQL database, the edge device can quickly run out of storage space unless captured data is uploaded to a cloud server or moved to an external disk. The solution should also be benchmarked on the target hardware for performance and memory utilization before installation, to ensure the hardware can support the application without performance issues.
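A rough back-of-the-envelope calculation shows how quickly on-device storage fills up. The sketch below is illustrative only: the 4 Mbps stream bitrate, the four cameras, and the 1 TB disk are assumed figures, not measurements from this deployment.

```python
SECONDS_PER_DAY = 86_400


def bytes_per_day(bitrate_mbps: float) -> float:
    """Bytes recorded per camera per day at a constant bitrate (megabits/s)."""
    return bitrate_mbps * 1_000_000 / 8 * SECONDS_PER_DAY


def days_until_full(disk_gb: float, cameras: int, bitrate_mbps: float) -> float:
    """Days of continuous recording before a disk of disk_gb (decimal GB) fills."""
    return disk_gb * 1e9 / (cameras * bytes_per_day(bitrate_mbps))


if __name__ == "__main__":
    per_camera_gb = bytes_per_day(4) / 1e9          # assumed 4 Mbps stream
    print(f"{per_camera_gb:.1f} GB per camera per day")
    print(f"{days_until_full(1000, 4, 4):.1f} days until a 1 TB disk is full")
```

Under these assumptions each camera produces about 43.2 GB per day, so four cameras fill a 1 TB disk in a little under six days, which is why a retention or offload policy matters at the edge.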

Security Hardening Practices and Data Privacy

Baseline security measures include making sensitive files inaccessible to unauthorized users, using TLS encryption, and following Vault’s baseline production hardening recommendations. We also applied additional security recommendations: service authentication, encryption of RTSP URIs, and hiding sensitive information by default in the UI.
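As one illustration of hiding sensitive information by default, credentials embedded in an RTSP URI can be masked before the URI is shown to a user. This is a minimal sketch using only the Python standard library; the function name `mask_rtsp_uri` and the placeholder text are hypothetical and are not taken from the project's code.

```python
from urllib.parse import urlsplit, urlunsplit


def mask_rtsp_uri(uri: str, placeholder: str = "***") -> str:
    """Replace any user:password part of a URI with a placeholder."""
    parts = urlsplit(uri)
    if parts.username is None and parts.password is None:
        return uri  # nothing sensitive in the authority part
    host = parts.hostname or ""
    if ":" in host:            # IPv6 literal needs its brackets back
        host = f"[{host}]"
    if parts.port is not None:
        host = f"{host}:{parts.port}"
    netloc = f"{placeholder}@{host}"
    return urlunsplit(
        (parts.scheme, netloc, parts.path, parts.query, parts.fragment)
    )


if __name__ == "__main__":
    print(mask_rtsp_uri("rtsp://admin:secret@192.0.2.10:554/stream1"))
```

Masking only protects what is displayed; the stored URI would still need the encryption mentioned above.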

Data Management

Video data can be stored in the filesystem or within a database. This solution leverages PostgreSQL to store video feeds and related metadata because it:

- Simplifies the data abstraction layer

- Provides unified security and privilege management

- Provides useful admin advantages out-of-the-box

PostgreSQL’s declarative partitioning lets the application treat video data as one large table when it is in fact a virtual table partitioned by camera ID and then by time. This provides better performance and simplifies data-warehousing tasks such as deleting recordings. The approach is agnostic to the location of the database server, which can run alongside the video-processing software or elsewhere in the network (including the cloud). Although this implementation expects a reliable database connection, the code can be modified to store data on the filesystem first and upload it to the database later when a connection cannot be guaranteed. This would require consideration of security requirements, on-device data storage expectations, and video timestamping.
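The two-level partitioning scheme can be sketched by generating the corresponding PostgreSQL DDL. The table name `video_fragments`, its columns, and the monthly time granularity are assumptions chosen for illustration; the article does not specify the actual schema.

```python
from datetime import date

PARENT = "video_fragments"  # hypothetical table name


def parent_ddl() -> str:
    """Top-level table: one logical table, partitioned by camera ID."""
    return (
        f"CREATE TABLE {PARENT} (\n"
        "    camera_id  integer     NOT NULL,\n"
        "    start_time timestamptz NOT NULL,\n"
        "    data       bytea       NOT NULL\n"
        ") PARTITION BY LIST (camera_id);"
    )


def camera_ddl(camera_id: int) -> str:
    """Per-camera partition, itself partitioned by time range."""
    cam = int(camera_id)
    return (
        f"CREATE TABLE {PARENT}_cam{cam} PARTITION OF {PARENT} "
        f"FOR VALUES IN ({cam}) PARTITION BY RANGE (start_time);"
    )


def month_ddl(camera_id: int, year: int, month: int) -> str:
    """Leaf partition holding one month of one camera's fragments."""
    cam = int(camera_id)
    start = date(year, month, 1)
    end = date(year + (month == 12), month % 12 + 1, 1)  # first day of next month
    return (
        f"CREATE TABLE {PARENT}_cam{cam}_{start:%Y_%m} "
        f"PARTITION OF {PARENT}_cam{cam} "
        f"FOR VALUES FROM ('{start}') TO ('{end}');"
    )


def drop_month_ddl(camera_id: int, year: int, month: int) -> str:
    """Deleting a month of recordings becomes a single DROP TABLE."""
    return f"DROP TABLE {PARENT}_cam{int(camera_id)}_{year:04d}_{month:02d};"


if __name__ == "__main__":
    for stmt in (parent_ddl(), camera_ddl(1), month_ddl(1, 2022, 9),
                 drop_month_ddl(1, 2022, 9)):
        print(stmt)
```

Queries still target the parent table, while old recordings are removed by dropping a leaf partition rather than deleting rows one by one.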

AI Ethics and Concerns

The increasing proliferation of AI throughout society has begun to highlight technology limitations, unintended use cases and consequences, and ethical concerns about how AI applications are best deployed. Teams designing and developing AI capabilities should therefore consider and evaluate their projects based on:

- Respecting rights, privacy, equity, inclusion, and security principles

- Enabling oversight

- Understanding AI-enabled decisions

Conclusion

Although new and innovative smart city use cases are proliferating, implementing these solutions remains challenging. This article provides an overview of the architecture, learnings, and challenges encountered during the design and implementation of a Security-as-a-Service solution deployed at the edge using open-source video analytics and AI/ML tools. Optimal AI/ML solutions can be built by focusing on customer-centric implementation problems, thus enabling faster development and industry adoption of CV-based solutions.

Lastly, as a moral responsibility, teams designing and developing advanced AI technologies should raise the ethical implications of their work and perform principle-based ethics evaluations of their projects.

References

- [1] GStreamer: open source multimedia framework. GStreamer. https://gstreamer.freedesktop.org/

- [2] DLStreamer. (n.d.). dlstreamer/dlstreamer. https://github.com/dlstreamer/dlstreamer

- [3] Inference Engine Developer Guide - OpenVINO™ toolkit. OpenVINO. https://docs.openvino.ai/2020.2/_docs_IE_DG_Deep_Learning_Inference_Engine_DevGuide.html

- [4] PostgreSQL Global Development Group. (2022, August 2). PostgreSQL. https://www.postgresql.org/

- [5] Build fast, reliable, and efficient software at scale. Go. https://go.dev/

- [6] Angular. https://angular.io/

- [7] Grafana: The Open Observability Platform. Grafana Labs. https://grafana.com/

- [8] EdgeX Foundry. The Linux Foundation. https://www.edgexfoundry.org/

To view all articles in this issue, please go to September 2022 eNewsletter. For a downloadable copy, please visit the IEEE Smart Cities Resource Center.
