Human-AI Teaming for the Next Generation Smart City Healthcare Systems

Written by M. Abdur Rahman, M. Shamim Hossain, Ahmad J. Showail, and Nabil A. Alrajeh

We have been witnessing impressive advances in healthcare provisioning in smart cities. Several technologies have contributed to this progress, including the Internet of Medical Things (IoMT), medical big data, edge learning, and 6G. With Artificial Intelligence (AI) capability at the edge, IoMT nodes such as CT scanners can now perform diagnosis at hospital edge nodes with very high accuracy and share the results with authorized medical personnel in near real time. The massive amount of medical big data generated by IoMT devices each day is becoming unmanageable for humans. AI has therefore contributed to superior forecasting and prediction, emergency health operations and response, prevention of infection spreading, highly accurate medical diagnosis and treatment, and drug research.

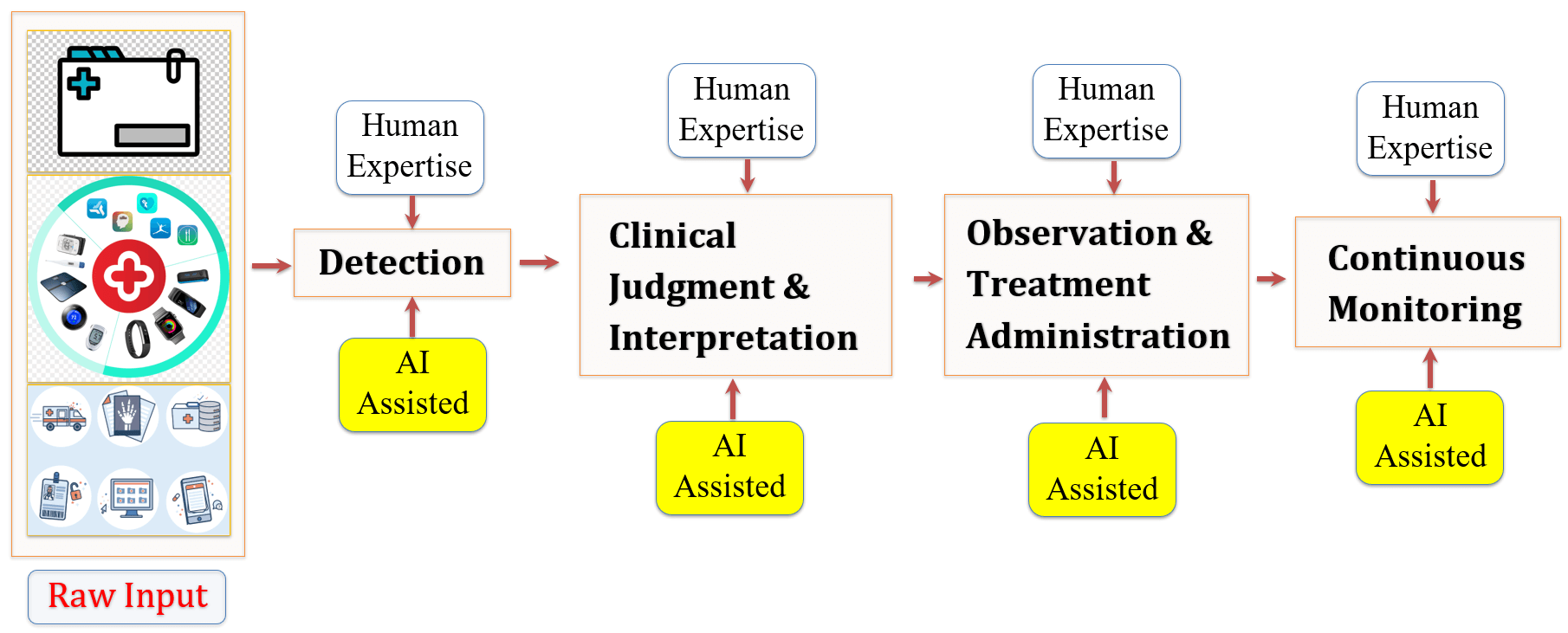

Figure 1. Potential AI-assisted clinical workflow.

One area in which AI excels is deciphering complex patterns in medical images. The speed and accuracy with which AI extracts quantifiable digital evidence from medical images complement doctors in their decision-making [1]. Moreover, AI can aggregate diagnostic results from diverse types of electronic health and medical records, such as genomic, radiological, pathological, physiological, and psychological data.

As shown in Fig. 1, AI has contributed to four high-level clinical processes: detection, characterization, monitoring, and intervention.

- Detection: AI algorithms can localize and annotate suspected regions in medical images to reduce observational oversights and assist in initial screening. They can help with early detection of lung cancer, incidental findings of asymptomatic brain abnormalities, robust interpretation of screening mammography, detection of prostate lesions in cancer imaging, and detection of macular edema in fundus images.

- Characterization: Once an abnormality is detected, for example cancerous tumor cells, AI algorithms can help provide a clinical interpretation of intra- and inter-tumor heterogeneity, abnormality, growth rate, and variability. They can also help classify abnormalities as benign or malignant, categorize tumors into cancer stages, and associate tumor features with genomic data.

- Monitoring: Capturing such a large number of discriminative tumor features allows AI monitoring to locate the physical spread of cancerous tumor cells in space and to track changes in tumor cell characteristics over time to inform prognosis.

- Intervention: AI can support the clinical workflow with interventions at different stages of medical care. In oncological treatment, for example, AI can assist human subject matter experts during radiological diagnosis of a mass lesion, histopathologic diagnosis, molecular genotyping, and determination of clinical outcome.

Despite their superior computing and medical data processing power, AI algorithms have not been widely adopted in existing smart city healthcare settings. This is because AI has not yet been able to support human-like interaction and context-aware responses to human queries. However, the new generation of AI models is moving past its “black box” era into a new dimension of social acceptability. By incorporating explainability into the AI ecosystem, AI researchers have been trying to combine the strengths of human intelligence and machine intelligence.

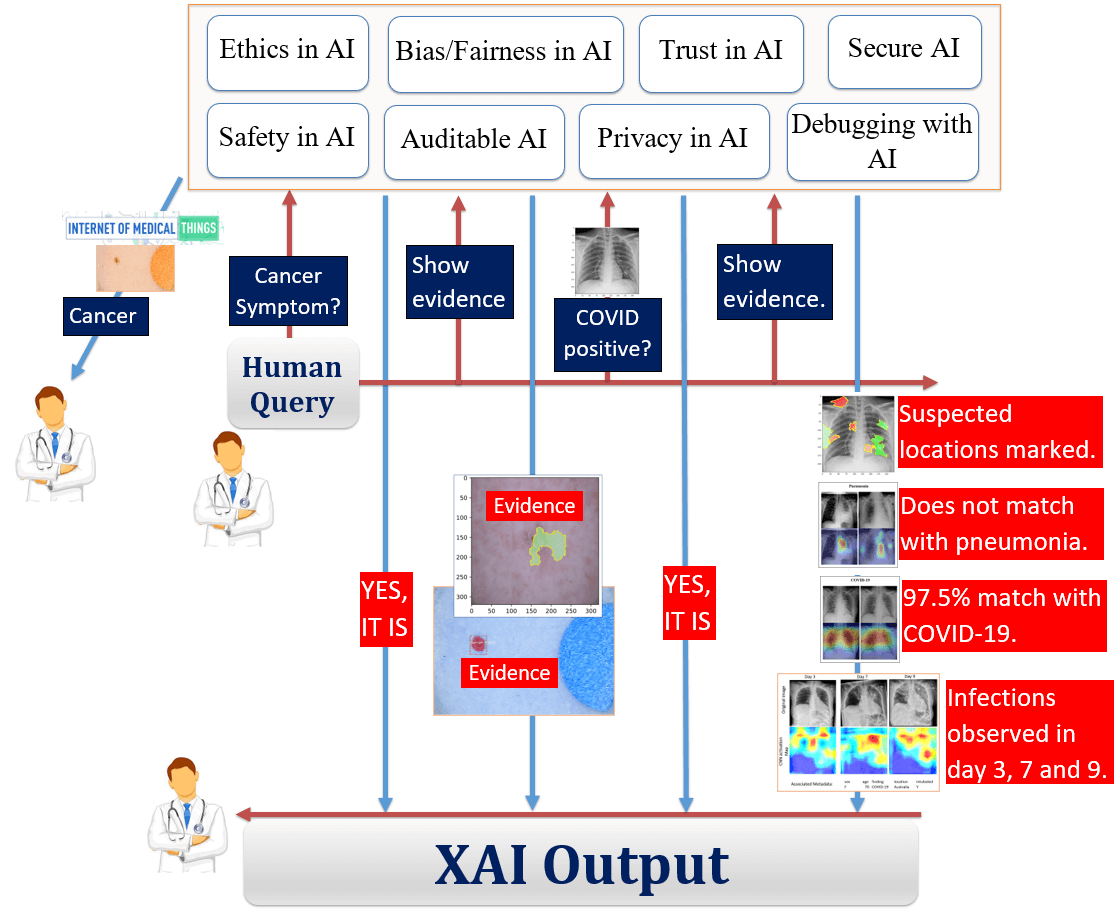

Human-AI teaming for smart city healthcare applications poses several challenges that need to be addressed. Healthcare in society is governed by human ethics, fairness, patient safety and security, financial regulations and insurance policies, medical data privacy, and the need for auditing support in case a medical doctor makes a mistake. By adopting features such as ethics, freedom from bias, trustworthiness, explainability, safety guarantees, data privacy, system security, and auditability, AI is becoming more sophisticated, and an increasing amount of decision-making is being performed via ‘human-AI teaming’ without compromising performance. In the medical environment, every healthcare service provider understands the context of his/her patients and uses diagnosis results and medical data points as evidence for the future treatment plan. In some cases, the medical doctor's judgment needs to be complemented, or even overruled, by AI cyber elements. To trust an AI system, clinicians must be convinced through semantic insight into, and evidence of, the underlying AI processes [2]. Making Explainable AI (XAI) interpretable by healthcare stakeholders offers them a degree of qualitative, functional understanding, also called semantic interpretation. Although some progress has been made in XAI, enabling healthcare stakeholders to understand XAI’s decision-making process remains a novel challenge [3].
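One widely used way for an image model to offer this kind of evidence is occlusion sensitivity: hide one patch of the image at a time and measure how much the model's score drops. Regions whose removal hurts the score most are the ones the decision relied on, and they can be drawn as a marker for the clinician. The sketch below is not the authors' method; it assumes a generic `predict` function that returns a scalar score, and `toy_predict` and the synthetic 16x16 image are hypothetical stand-ins for a trained model and a real scan.

```python
import numpy as np


def occlusion_map(image, predict, patch=4, stride=4, fill=0.0):
    """Occlusion sensitivity: score drop when each patch of the image is hidden."""
    h, w = image.shape
    base = predict(image)                 # score on the unmodified image
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            drop = base - predict(occluded)   # large drop = region mattered
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average over overlapping patches (no-op when stride == patch).
    return heat / np.maximum(counts, 1)


def toy_predict(img):
    """Hypothetical 'lesion score': fraction of bright pixels in the image."""
    return float((img > 0.5).mean())


# Synthetic scan with one bright 4x4 "lesion" (hypothetical data).
image = np.zeros((16, 16))
image[8:12, 8:12] = 1.0

heat = occlusion_map(image, toy_predict)
marker = heat > 0.5 * heat.max()          # region to highlight for the doctor
print(np.argwhere(marker).min(axis=0), np.argwhere(marker).max(axis=0))
# -> [8 8] [11 11]
```

With a real classifier, `predict` would return the probability of the diagnosed class, and a smaller `stride` gives a smoother map at higher compute cost.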

What makes XAI appealing is that it could answer queries such as “Would you trust the AI that advises invasive surgery based on the tumor image classification?” by presenting various types of evidence and diagnostic data points. Figure 2 shows a high-level scenario in which AI works with human actors as a team. We assume that the next generation of AI will have multimodal features embedded to support human-like ethics, avoid bias in the dataset or in inference, earn the trust of its stakeholders, protect the privacy and security of the underlying dataset and model, allow debugging and auditing of the process, and ensure the safety of the AI model. The AI engine answers human queries with the appropriate evidence. The steps of the treatment plan are worked out much as two doctors share their experience when deciding on a treatment plan, allowing doctors to work side by side with their AI counterparts.

Figure 2 shows an interaction between a human doctor and the XAI, in which the doctor reviews a melanoma skin cancer report generated by the XAI. In response to the doctor's query, the XAI returns the final inference from the skin cancer model. The doctor can then question different aspects of the AI decision by sending further queries to the engine until convinced by the results. For example, the XAI engine replies with a marker on top of the cancerous area that was used in the decision-making process. Suspecting a possible COVID-19 comorbidity, the doctor asks about the diagnosis results available from the X-ray and CT scan images. After returning a positive result, the XAI model presents four pieces of evidence that the algorithm itself used. First, it marks the suspected region of infection. Second, it finds no similarity between this infection and existing pneumonia cases. Third, it finds a 97.5% similarity with the COVID-19 symptom database it was matched against. Finally, it presents the progression of the infection in the same patient on days 3, 7, and 9, which reveals the spreading pattern of the COVID-19 pathogen.
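The article does not state how the similarity scores in this scenario are computed. One common approach, assumed here purely for illustration, compares a feature embedding of the patient's scan against a reference prototype for each condition using cosine similarity, then reports every comparison as evidence. The prototype vectors, the `match_reference` helper, and the 0.8 match threshold are all hypothetical; a real system would obtain embeddings from a trained imaging model rather than hand-written arrays.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def match_reference(case, references, threshold=0.8):
    """Rank hypothetical reference prototypes by similarity to the case.

    Returns (label, similarity, is_match) tuples, best match first. A prototype
    counts as a match only if its similarity reaches `threshold` (assumed cut-off).
    """
    ranked = sorted(
        ((label, cosine_similarity(case, proto)) for label, proto in references.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    return [(label, sim, sim >= threshold) for label, sim in ranked]


# Hypothetical 3-dimensional embeddings; real ones would come from an imaging model.
references = {
    "covid19": np.array([1.0, 0.0, 1.0]),
    "pneumonia": np.array([0.0, 1.0, 0.0]),
}
case = np.array([0.9, 0.0, 1.1])

evidence = match_reference(case, references)
for label, sim, is_match in evidence:
    print(f"{label}: {100 * sim:.1f}% similarity, match={is_match}")
```

Returning the full ranked list, rather than only the top label, lets the engine show the doctor both the positive evidence (high similarity to COVID-19) and the negative evidence (no similarity to pneumonia).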

We envision a democratized XAI adopted by smart cities, in which physical patients and IoMT devices have digital twins managed by the XAI. Future smart cities will leverage XAI to offer innovative medical breakthroughs in telehealthcare, doctor on demand, remote medical diagnosis, and in-home personalized healthcare support, opening the door to ubiquitous human-XAI teaming in healthcare.

Figure 2. A scenario of the next generation smart city healthcare system, where AI will work with human actors as a team.

References

[1] W. L. Bi et al., “Artificial intelligence in cancer imaging: Clinical challenges and applications,” CA Cancer J. Clin., vol. 69, no. 2, pp. 127–157, 2019, doi: 10.3322/caac.21552.

[2] M. A. Rahman, M. S. Hossain, N. Alrajeh, and N. Guizani, “B5G and Explainable Deep Learning Assisted Healthcare Vertical at the Edge: COVID-19 Perspective,” IEEE Netw., 2020.

[3] J. B. Lamy, B. Sekar, G. Guezennec, J. Bouaud, and B. Séroussi, “Explainable artificial intelligence for breast cancer: A visual case-based reasoning approach,” Artif. Intell. Med., vol. 94, pp. 42–53, 2019.

This article was edited by Wei Zhang

For a downloadable copy of the June 2021 eNewsletter which includes this article, please visit the IEEE Smart Cities Resource Center.
