Explainable Machine Learning for Secure Smart Vehicles

Written by Michele Scalas and Giorgio Giacinto

Vehicle architectures are being redesigned to enable autonomous driving and to connect vehicles to the surrounding Smart City environment through vehicle-to-everything (V2X) communications. A significant part of the “smartness” of vehicles, such as computer vision capabilities, is enabled by Machine Learning (ML) models, which have proven to be extremely effective. However, the complexity of these algorithms often makes it hard to understand what the models learn, and adversarial attacks might alter or mislead the expected behavior of the vehicle, which undermines proper safety testing before deployment. For these reasons, the research community is increasingly interested in techniques for explaining machine learning models as a way to improve the safety and security of smart vehicles [1].

Smart Vehicles and ML

Intuitively, the main component that benefits from ML algorithms is autonomous driving, which can also involve the coordination of a fleet of vehicles (platooning). We can think of autonomous driving as a set of several ADASs (Advanced Driver Assistance Systems) with specialized roles. These can pertain to the environment outside the vehicle (i.e., computer vision tasks) or to the vehicle cabin. In particular, the survey by Borrego-Carazo et al. [2] highlights four main ADAS tasks: a) vehicle and pedestrian detection, b) traffic sign recognition, c) road detection and scene understanding, and d) driver identification and behavior recognition. Besides ADAS-related applications, ML can be a tool for managing the vehicle (e.g., for fuel prediction) and for assessing cybersecurity.

Deploying ML into the Real World

Several concerns must be considered when deploying ML-based systems. Typically, the tasks to perform are modeled through a wide variety of features that cover several characteristics of the entities to analyze. On the surface, this practice yields considerable detection performance. However, it makes it hard to gain insight into the knowledge extracted by the learning algorithm. This issue is crucial because it negatively affects two main aspects of detectors:

- Effectiveness: Detectors might learn spurious patterns, i.e., they make decisions possibly on the basis of artifacts or non-descriptive elements of the input samples

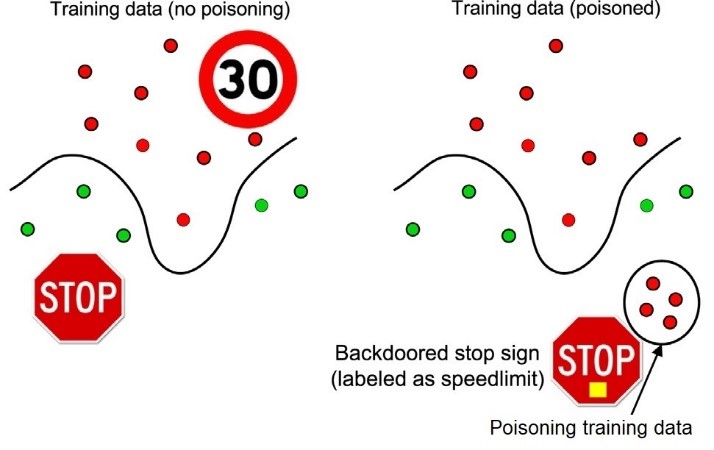

- Security: The learning algorithms might become particularly vulnerable to adversarial attacks, i.e., carefully perturbed inputs that overturn their outputs, causing, for example, a stop sign to be classified as a speed limit (Fig. 1).

For these reasons, relying on some kind of explanations about the logic behind such detectors reveals the truly characterizing features, therefore, guiding the human expert towards an aware design of them. Moreover, new legislation may enforce ML-based systems to be interpretable enough to provide an explanation of their decisions.

Figure 1: Example of a backdoored stop sign. Backdoor/poisoning integrity attacks place mislabeled training points in a region of the feature space far from the rest of training data. The learning algorithm labels such region as desired, allowing for subsequent intrusions/misclassifications at test time [3].
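The effectiveness concern raised above can be made concrete with a small sketch. It is not taken from the article or its references: the three synthetic features (`genuine`, `artifact`, `noise`), the logistic model, and the weight-times-input attribution are all illustrative assumptions. The idea is that a simple explanation may expose a detector that leans on a training-set artifact, which a high training accuracy alone would hide.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n, artifact_tracks_label):
    """Synthetic data with hypothetical features: genuine signal, artifact, pure noise."""
    s = rng.choice([-1.0, 1.0], size=n)                  # class encoded as a sign
    genuine = s + rng.normal(0.0, 1.5, n)                # noisy, but truly tied to the class
    if artifact_tracks_label:
        artifact = s + rng.normal(0.0, 0.1, n)           # clean shortcut present at training time
    else:
        artifact = rng.choice([-1.0, 1.0], size=n) + rng.normal(0.0, 0.1, n)  # shortcut broken
    noise = rng.normal(0.0, 1.0, n)
    return np.column_stack([genuine, artifact, noise]), s

def train_logreg(X, s, steps=300, lr=0.5):
    """Plain gradient descent on the logistic loss (no bias: the data are symmetric)."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        margin = s * (X @ w)
        # gradient of mean(log(1 + exp(-margin))) with respect to w
        grad = -(X * (s / (1.0 + np.exp(margin)))[:, None]).mean(axis=0)
        w -= lr * grad
    return w

def accuracy(w, X, s):
    return float(np.mean(np.sign(X @ w) == s))

X_train, s_train = make_data(2000, artifact_tracks_label=True)
X_shift, s_shift = make_data(2000, artifact_tracks_label=False)
w = train_logreg(X_train, s_train)

# Weight-times-input attribution: average push of each feature toward the correct class.
attribution = (w * X_train * s_train[:, None]).mean(axis=0)
names = ["genuine", "artifact", "noise"]
ranking = [names[i] for i in np.argsort(-attribution)]

train_acc = accuracy(w, X_train, s_train)
shift_acc = accuracy(w, X_shift, s_shift)
print("attribution ranking:", ranking)
print("training accuracy:", train_acc, "accuracy once the artifact is uninformative:", shift_acc)
```

Under these assumptions, one would expect the attribution ranking to be dominated by the artifact, and the accuracy to fall once the artifact stops tracking the class. The first observation is the kind of hint an explanation can give a designer before deployment.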

Explaining Smart Vehicles’ Behavior

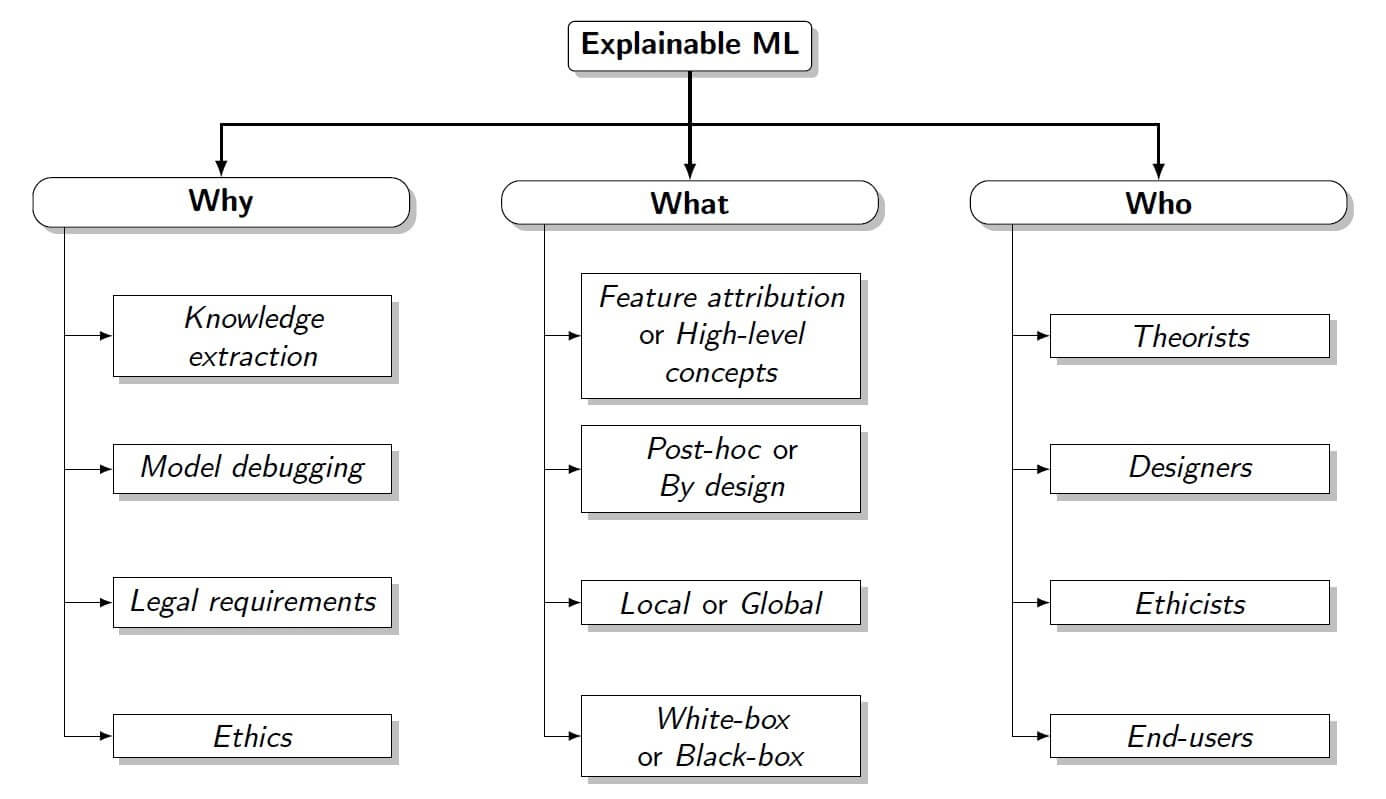

Explainable ML can assume different traits depending on several factors, such as why the explanations are produced, whom they are addressed to, what they consist of (see Fig. 2). For example, depending on the goal, explanations can be addressed to different stakeholders, such as:

Figure 2: Taxonomy of explainable ML under three criteria

When reasoning about the usage of explainable ML for smart vehicles, we can adopt two points of view, as smart vehicles can be seen both as human-agent systems — highlighting the interaction between digital systems and human users — and as CPSs (cyber-physical systems) — highlighting the interaction between cyberspace and the physical environment [1].

Human-agent Systems. The reason to produce and present explanations is to gain trust in the decisions made by the system. Since a modern vehicle ecosystem includes both safety-critical and non-safety-critical ML-based algorithms, it is crucial to provide explanations for the former, preferably obtained from interpretable-by-design models. For example, in the context of autonomous vessels, passengers may want feedback during boarding or in dangerous situations [4]. In non-safety-critical cases, explanations could be beneficial but are not strictly necessary.

Cyber-physical Systems. The central aspect that emerges is the relationship between security in cyberspace and safety in physical space. In a sense, “safety is concerned with protecting the environment from the system; security is concerned with protecting the system from the environment” [5]. While guaranteeing safety is a matter of applying strict, consolidated procedures, often coupled with certifications, security is a continuous process of adapting the defense mechanisms to novel attacks.

Impact of Explanations on Security

Given the above-described context, it is not straightforward to mark a clear distinction between cyber and physical space. Therefore, typical security measures from the IT world, e.g., cryptography and authentication, should be combined with ML-specific, comprehensive solutions.

Malware Characterization. Machine learning models can achieve remarkable accuracy in malware detection. However, because of newly discovered vulnerabilities and exploits, novel attacks appear at a rapid pace. Consequently, there is a need to design systems that effectively detect new attack variants without increasing the false positive rate. In this sense, designers can leverage explanations to:

Adversarial Attacks. The other security aspect concerns machine learning itself, namely its vulnerability to adversarial attacks. In this case, given the close interaction of vehicles with humans and the environment, such attacks might occur through the physical space as a result of intentionally deceitful behavior. It is fundamental to leverage proper techniques to:

In this sense, the role of explainable machine learning is ambiguous. On the one hand, inferring the global model behavior through explanations can help attackers generate a more effective attack. On the other hand, for the same reason, designers can leverage explanations to design models where only patterns that are both effective and descriptive of the problem are kept; thus, spurious patterns that represent vulnerable points for models are removed.
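The attacker's side of this ambiguity can be sketched on a toy linear detector. Everything here is hypothetical: the weights, the input, the perturbation budget `eps`, and the limit of `k` modifiable features are made-up values, and the perturbation is a simple gradient-sign step rather than any specific attack from the literature. The sketch compares an attacker who knows which features an explanation ranks highest with one who picks features without that knowledge.

```python
import numpy as np

# Hypothetical linear detector: a positive score means "stop sign" (values are made up).
w = np.array([0.2, -0.1, 3.0, 0.3, -2.5])
x = np.array([1.0, 1.0, 1.0, 1.0, -1.0])

def score(v):
    return float(w @ v)

def perturb(v, features, eps):
    """Shift the chosen features by eps against the sign of their weight,
    which is the gradient-sign direction for a linear score."""
    adv = v.copy()
    adv[features] -= eps * np.sign(w[features])
    return adv

k, eps = 2, 1.5
guided = np.argsort(-np.abs(w))[:k]   # the k features an explanation ranks highest
unguided = np.arange(k)               # an arbitrary pick made without that knowledge

clean_score = score(x)
guided_score = score(perturb(x, guided, eps))
unguided_score = score(perturb(x, unguided, eps))
print(clean_score, guided_score, unguided_score)
```

With these values, the explanation-guided perturbation drives the score below zero while the unguided one leaves the decision unchanged. The same ranking is what a designer would inspect to decide whether the decision hinges on features that are genuinely descriptive or on fragile ones worth removing.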

Confidentiality. Finally, explainability makes it possible to gain information about ML systems, and such information could be confidential. Consequently, using explanations could lead to violations of privacy and intellectual property. This issue is particularly relevant in the automotive domain, where OEMs need to avoid any kind of industrial espionage.
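A deliberately extreme sketch shows how an explanation can leak confidential model details. It assumes a hypothetical service, `DeployedModel`, that exposes a linear score together with a gradient-based explanation; neither the class nor its parameters come from the article. For a linear model the gradient of the score with respect to the input is the weight vector itself, so a single query is enough to recover it.

```python
import numpy as np

class DeployedModel:
    """Hypothetical black-box service: callers only see scores and explanations."""

    def __init__(self):
        self._w = np.array([0.8, -1.2, 0.05, 2.0])  # confidential parameters (made up)
        self._b = -0.3

    def score(self, x):
        return float(self._w @ x + self._b)

    def explain(self, x):
        # Gradient of the score with respect to the input.
        # For a linear model this is the weight vector, whatever x is.
        return self._w.copy()

model = DeployedModel()
probe = np.zeros(4)                                  # any input works for a linear model

stolen_w = model.explain(probe)                      # weights read off the explanation
stolen_b = model.score(probe) - float(stolen_w @ probe)  # bias from one score query
print(stolen_w, stolen_b)
```

Real detectors are rarely this simple, and recovering a nonlinear model would take far more than one query, but the sketch conveys why explanation interfaces need to be treated as part of the attack surface.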

Conclusions

Explainable machine learning can have a significant impact for both designers and end users. Accordingly, the research community has already started exploring the improvements it can bring to the mobility domain, which requires considering both safety and security. At the same time, it is worth keeping in mind that explanations could also be used to disclose models’ confidential data or to generate more effective adversarial attacks.

References

- [1] Michele Scalas and Giorgio Giacinto. “On the Role of Explainable Machine Learning for Secure Smart Vehicles.” In: 2020 AEIT International Conference of Electrical and Electronic Technologies for Automotive (AEIT AUTOMOTIVE). Torino, Italy, Oct. 2020, pp. 1–6.

- [2] Juan Borrego-Carazo, David Castells-Rufas, Ernesto Biempica, and Jordi Carrabina. “Resource-Constrained Machine Learning for ADAS: A Systematic Review.” In: IEEE Access 8 (2020), pp. 40573–40598. issn: 2169-3536. doi: 10.1109/ACCESS.2020.2976513. url: https://ieeexplore.ieee.org/document/9016213/.

- [3] Battista Biggio and Fabio Roli. “Wild patterns: Ten years after the rise of adversarial machine learning.” In: Pattern Recognition 84 (2018), pp. 317–331. issn: 0031-3203. doi: 10.1016/J.PATCOG.2018.07.023. url: https://www.sciencedirect.com/science/article/pii/S0031320318302565.

- [4] Jon Arne Glomsrud, André Ødegårdstuen, Asun Lera St. Clair, and Øyvind Smogeli. “Trustworthy versus Explainable AI in Autonomous Vessels.” In: Proceedings of the International Seminar on Safety and Security of Autonomous Vessels (ISSAV) and European STAMP Workshop and Conference (ESWC) 2019. September. Sciendo, Dec. 2020, pp. 37–47. doi: 10.2478/9788395669606-004.

- [5] Kate Netkachova and Robin E. Bloomfield. “Security-Informed Safety.” In: Computer 49.6 (June 2016), pp. 98–102. issn: 0018-9162. doi: 10.1109/MC.2016.158. url: http://ieeexplore.ieee.org/document/7490310/.

- [6] Michele Scalas, Konrad Rieck, and Giorgio Giacinto. “Explanation-Driven Characterization of Android Ransomware.” In: ICPR’2020 Workshop on Explainable Deep Learning - AI. 2021, pp. 228–242. doi: 10.1007/978-3-030-68796-0_17.

This article was edited by Wei Zhang

For a downloadable copy of the June 2021 eNewsletter which includes this article, please visit the IEEE Smart Cities Resource Center.
